Direct3D 11 Graphics Pipeline

The rasterization pipeline.

DirectX is the API that lets the CPU and the GPU communicate with each other. The CPU side is written in C++, while the GPU side (the shaders) is written in HLSL.

Vertex shader: processes each vertex of the mesh

Tessellation stage: subdivides surfaces into more, smaller triangles

Rasterizer stage: identifies which pixels are going to be rendered; the pixel shader then decides what color each pixel has

Input-Assembler

- the entry point of the pipeline (the first stage)

- assembles data into primitives

- gets the data it starts with from buffers set up by the CPU

- receives: vertices from vertex buffers (blocks of memory holding the vertices), indices from index buffers, and control point patch lists

- outputs: primitives

Vertex Buffers

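Each vertex contains at least a position. (One point on a model can need several vertices, one for each connected polygon: a cube corner, for example, is stored as 3 vertices, one per face that meets there.)

They can also contain color, texture coordinate, and normal information.

Input layout: a template describing how the data inside each vertex is organized. The vertex data has to conform to it so the pipeline knows how to read it.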
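To make the input layout idea concrete, here is a minimal C++ sketch, assuming a vertex with a position, a normal, and texture coordinates (the Vertex and LayoutElement names are hypothetical). In real Direct3D 11 code the layout is described with an array of D3D11_INPUT_ELEMENT_DESC entries, which carry more fields (format, input slot, and so on); this stand-in only records the semantic, size, and byte offset of each attribute.

```cpp
#include <cassert>
#include <cstddef>  // offsetof, std::size_t

// Hypothetical vertex format: position + normal + texture coordinates.
struct Vertex {
    float position[3]; // x, y, z     -> bytes 0..11
    float normal[3];   // nx, ny, nz  -> bytes 12..23
    float uv[2];       // u, v        -> bytes 24..31
};

// One entry of a simplified input layout: which attribute, how many floats,
// and where it starts inside a Vertex.
struct LayoutElement {
    const char* semantic;   // matched against the shader's input semantics
    int floatCount;
    std::size_t byteOffset;
};

// The layout the pipeline would use to read each Vertex from the vertex buffer.
const LayoutElement kVertexLayout[] = {
    {"POSITION", 3, offsetof(Vertex, position)},
    {"NORMAL",   3, offsetof(Vertex, normal)},
    {"TEXCOORD", 2, offsetof(Vertex, uv)},
};

// The stride is the distance in bytes from one vertex to the next in the buffer.
constexpr std::size_t kVertexStride = sizeof(Vertex);
```

The byte offsets and the stride are exactly what the pipeline needs to find each attribute of vertex N at N * stride + offset.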

Index buffers

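- feed indices to the input assembler

- save memory and improve speed, because a vertex shared by several triangles is stored only once

- each index points to a vertex (its position and other data) in the vertex buffer

- a face of a cube has 4 vertices and a cube has 6 faces, so that's 24 vertices. Without an index buffer, each face is drawn as 2 triangles of 3 vertices each, so the cube needs 36 vertices.

Doesn't seem like that big of a saving, but it adds up when the vertices carry more information than just position.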
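The cube arithmetic can be checked with a short C++ sketch. It assumes a triangle list and 16-bit indices (2 bytes each); the function names are hypothetical. With a 12-byte position-only vertex the index buffer saves 72 bytes (432 vs 360), but with a 32-byte vertex (position, normal, texture coordinates) the saving grows to 312 bytes (1152 vs 840).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Bytes needed to draw a cube as a plain triangle list (no index buffer):
// 6 faces x 2 triangles x 3 vertices = 36 vertices, each stored in full.
std::size_t nonIndexedCubeBytes(std::size_t vertexSize) {
    const std::size_t vertexCount = 6 * 2 * 3;
    return vertexCount * vertexSize;
}

// Bytes needed with an index buffer: 6 faces x 4 unique vertices = 24 vertices,
// plus 36 indices. 16-bit indices are assumed here (32-bit ones also exist).
std::size_t indexedCubeBytes(std::size_t vertexSize) {
    const std::size_t vertexCount = 6 * 4;
    const std::size_t indexCount = 6 * 2 * 3;
    return vertexCount * vertexSize + indexCount * sizeof(std::uint16_t);
}
```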

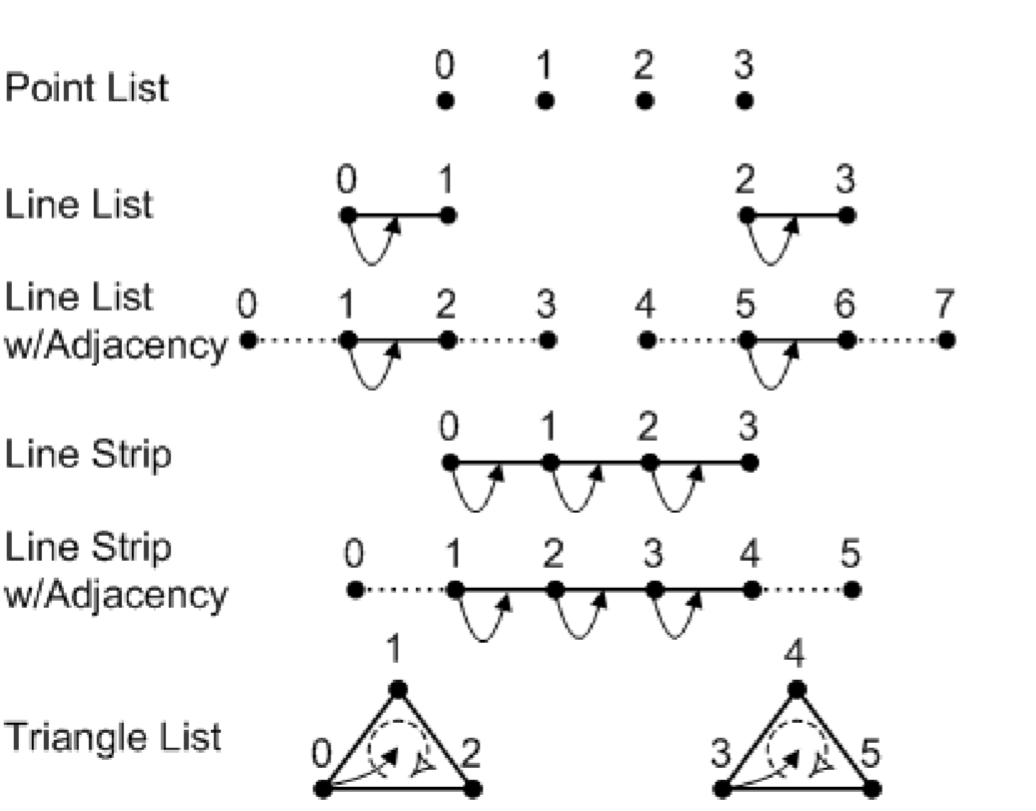

Primitive Types

The primitive topology tells the input assembler how to interpret the vertices in the vertex buffer.

▪ Types:

– Point List

– Line List

– Line List with adjacency (each line also carries its neighboring vertices, one before and one after it, so later stages such as the geometry shader can see the adjacent data)

– Line Strip

– Triangle List

– Triangle Strip

– Control Point Patch Lists

▪ Control point patch lists are the topology needed for the tessellation stage
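A triangle strip reuses vertices between neighboring triangles, while a list repeats them. The C++ sketch below (hypothetical helper names) expands both topologies into triangles; in Direct3D 11 the topology itself is chosen with IASetPrimitiveTopology, e.g. D3D11_PRIMITIVE_TOPOLOGY_TRIANGLESTRIP. The sketch flips every other strip triangle to keep a consistent winding; the exact vertex order the hardware uses for those triangles is not claimed here. Five strip indices {0, 1, 2, 3, 4} describe the same three triangles as nine list indices.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Triangle = std::array<int, 3>;

// Triangle list: every 3 indices form one independent triangle.
std::vector<Triangle> expandTriangleList(const std::vector<int>& idx) {
    std::vector<Triangle> tris;
    for (std::size_t i = 0; i + 2 < idx.size(); i += 3)
        tris.push_back({idx[i], idx[i + 1], idx[i + 2]});
    return tris;
}

// Triangle strip: after the first two indices, each new index forms a triangle
// with the previous two. Every other triangle has its first two vertices
// swapped so all triangles keep the same winding (facing) direction.
std::vector<Triangle> expandTriangleStrip(const std::vector<int>& idx) {
    std::vector<Triangle> tris;
    for (std::size_t i = 0; i + 2 < idx.size(); ++i) {
        if (i % 2 == 0)
            tris.push_back({idx[i], idx[i + 1], idx[i + 2]});
        else
            tris.push_back({idx[i + 1], idx[i], idx[i + 2]});
    }
    return tris;
}
```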

Creating a constant buffer: used to store shader constants, values that stay the same for a whole draw call (e.g. transformation matrices). You want as few draw calls as possible.
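One practical detail when creating a constant buffer: Direct3D 11 requires its size in bytes to be a multiple of 16. A minimal C++ sketch, assuming a hypothetical per-object buffer holding a 4x4 matrix and a tint color, checks that at compile time and shows how to round a size up. Grouping the per-object values into one buffer means it is updated once per draw call rather than value by value.

```cpp
#include <cassert>
#include <cstddef>

// Round a size up to the next multiple of 16 bytes.
constexpr std::size_t alignTo16(std::size_t bytes) {
    return (bytes + 15) / 16 * 16;
}

// Hypothetical per-object constants, updated once per draw call.
struct PerObjectConstants {
    float worldViewProj[16]; // 4x4 matrix, 64 bytes
    float tint[4];           // RGBA color, 16 bytes
};

// Catch a wrongly sized constant buffer struct at compile time.
static_assert(sizeof(PerObjectConstants) % 16 == 0,
              "constant buffer size must be a multiple of 16 bytes");
```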

Vertex shader: transforms every vertex from object (model) space into clip space; after the perspective divide and the viewport transform, it ends up in screen space.
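The chain from object space to screen space can be traced by hand. The C++ sketch below assumes a column-vector convention and uses a toy projection matrix that simply copies z into w (a real projection matrix also remaps depth); all names are hypothetical. The vertex shader's job ends at the clip-space result; the divide by w and the viewport mapping happen afterwards in fixed-function hardware.

```cpp
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>; // row-major storage

// Column-vector convention: result = m * v. (HLSL code often uses mul(v, M)
// with row vectors instead; that is the same math with the matrix transposed.)
Vec4 transform(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            r[row] += m[row][col] * v[col];
    return r;
}

struct ScreenPoint { float x, y; };

// After the vertex shader: divide by w (perspective divide) to get normalized
// device coordinates in [-1, 1], then map them onto a width x height viewport.
// Screen y grows downward, so NDC y is flipped.
ScreenPoint toScreen(const Vec4& clip, float width, float height) {
    float ndcX = clip[0] / clip[3];
    float ndcY = clip[1] / clip[3];
    return {(ndcX + 1.0f) * 0.5f * width, (1.0f - ndcY) * 0.5f * height};
}
```

For example, the vertex (1, 1, 0) moved 2 units along z gives clip coordinates (1, 1, 2, 2), normalized device coordinates (0.5, 0.5), and pixel (600, 150) on an 800 x 600 viewport.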

Semantics: placed after a variable (e.g. float4 pos : SV_Position), a semantic tells the system how to treat that variable. For the vertex shader's output position you need the semantic SV_Position.

Tessellation is hard to integrate in Unreal Engine.

The geometry shader can add and remove vertices (e.g. for hair, grass, fuzz). Geometry shaders are clunky and expensive, so they are not used as much (Unity makes them easy to use).

Stream-Output stage: optionally stores the vertex data coming out of the geometry stage back into buffers in memory

Rasterization: flattens the 3D primitives into a 2D image by working out which pixels each primitive covers.
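Deciding which pixels a triangle covers can be sketched with edge functions, a common rasterization technique (not necessarily how any given GPU implements it). This C++ sketch tests each pixel center against the three edges; Direct3D's tie-breaking rule for centers that lie exactly on an edge (the top-left rule) is left out, and the names are hypothetical. For the triangle (0, 0), (4, 0), (0, 3) on a 4 x 4 grid, 6 pixel centers are inside.

```cpp
#include <cassert>

struct Point2 { float x, y; };

// Edge function: positive on one side of the edge a->b, negative on the
// other, zero on the line itself.
float edge(Point2 a, Point2 b, Point2 p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Count the pixels of a width x height grid whose centers lie inside the
// triangle. A center is inside when all three edge functions share a sign,
// which works for either winding order. Centers exactly on an edge are
// treated as outside here (no tie-breaking rule).
int countCoveredPixels(Point2 a, Point2 b, Point2 c, int width, int height) {
    int covered = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            Point2 p{x + 0.5f, y + 0.5f}; // sample at the pixel center
            float e0 = edge(a, b, p), e1 = edge(b, c, p), e2 = edge(c, a, p);
            bool allPos = e0 > 0 && e1 > 0 && e2 > 0;
            bool allNeg = e0 < 0 && e1 < 0 && e2 < 0;
            if (allPos || allNeg) ++covered;
        }
    }
    return covered;
}
```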

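Interpolation of per-vertex data: values such as colors and texture coordinates are interpolated across each triangle (barycentric interpolation, with perspective correction)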
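Per-vertex interpolation across a triangle can be sketched with barycentric weights. The C++ example below is a simplified model: it interpolates linearly in screen space and skips the perspective correction the real pipeline applies; the names are hypothetical. At point (1, 1) in the triangle (0, 0), (4, 0), (0, 4) with red, green, and blue corners, the weights are 0.5, 0.25, and 0.25, giving the color (0.5, 0.25, 0.25).

```cpp
#include <cassert>

struct Point2 { float x, y; };
struct Color { float r, g, b; };

// Twice the signed area of triangle (a, b, p).
float edge(Point2 a, Point2 b, Point2 p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Interpolate a per-vertex color at point p inside triangle (a, b, c).
// Each weight is the area of the sub-triangle opposite a vertex divided by
// the area of the whole triangle, so the three weights sum to 1.
Color interpolateColor(Point2 a, Point2 b, Point2 c,
                       Color ca, Color cb, Color cc, Point2 p) {
    float area = edge(a, b, c);
    float wa = edge(b, c, p) / area;
    float wb = edge(c, a, p) / area;
    float wc = edge(a, b, p) / area;
    return {wa * ca.r + wb * cb.r + wc * cc.r,
            wa * ca.g + wb * cb.g + wc * cc.g,
            wa * ca.b + wb * cb.b + wc * cc.b};
}
```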

Output-merger/blending stage

combines each new pixel with what is already in the render target: the depth test discards new pixels that are occluded, and blending mixes the new color with the old one.
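A single pixel going through the output merger can be modeled in C++, assuming a "less than" depth comparison (a common setting) and standard source-alpha blending; the names are hypothetical, and the real depth and blend states are configurable. A farther fragment is discarded, while a closer half-transparent red fragment over blue gives (0.5, 0, 0.5).

```cpp
#include <cassert>

struct RGBA { float r, g, b, a; };

struct Pixel {
    RGBA color;  // what is currently in the render target
    float depth; // what is currently in the depth buffer (smaller = closer)
};

// One simplified output-merger step for a single pixel:
// 1. Depth test: keep the new fragment only if it is closer than what is
//    stored; otherwise it is occluded and discarded.
// 2. Blending: result = src * src.a + dst * (1 - src.a).
void outputMerge(Pixel& target, RGBA src, float srcDepth) {
    if (!(srcDepth < target.depth))
        return; // occluded: the old color and depth stay
    float a = src.a;
    target.color = {src.r * a + target.color.r * (1 - a),
                    src.g * a + target.color.g * (1 - a),
                    src.b * a + target.color.b * (1 - a),
                    src.a * a + target.color.a * (1 - a)};
    target.depth = srcDepth;
}
```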

Technique: describes how to put everything together, i.e. which shaders and render states each pass uses.

Structs: similar to classes but more primitive (they only group data together). We can reuse them later, e.g. as shader input and output types.

VS_OUTPUT is a struct with a float4 position member. Writing VS_OUTPUT output = (VS_OUTPUT)0; casts 0 to the VS_OUTPUT type, which initializes all of its values to 0.
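HLSL's (VS_OUTPUT)0 idiom has a close C++ analog: value-initialization with empty braces. This is a C++ sketch, not HLSL; the VsOutput struct and its members are hypothetical stand-ins for a shader output struct.

```cpp
#include <cassert>

// C++ stand-in for an HLSL output struct like VS_OUTPUT.
struct VsOutput {
    float position[4]; // plays the role of HLSL's float4 position
    float color[4];
};

// HLSL: VS_OUTPUT output = (VS_OUTPUT)0;  zeroes every member.
// C++: empty braces value-initialize the struct, which also zeroes every member.
VsOutput makeZeroedOutput() {
    VsOutput output{};
    return output;
}
```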

To comment, you can use // for a single line, or /* */ for multiline comments.

Preprocessor

Macros are defined at the beginning of the file with #define. Before compiling, the preprocessor finds every instance of a macro name and replaces it with its definition.
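HLSL uses a C-style preprocessor, so the textual replacement can be shown with C++ macros. The macro and variable names are hypothetical. The extra parentheses in SQUARE show why it matters that the replacement is purely textual.

```cpp
#include <cassert>

// Object-like macro: every later occurrence of MAX_LIGHTS is replaced by 4
// before the compiler proper sees the code.
#define MAX_LIGHTS 4

// Function-like macro. Without the inner parentheses, SQUARE(1 + 2) would
// expand to 1 + 2 * 1 + 2, which is 5 instead of 9.
#define SQUARE(x) ((x) * (x))

int lightSlots[MAX_LIGHTS]; // after preprocessing: int lightSlots[4];

int squaredSum(int a, int b) {
    return SQUARE(a + b); // after preprocessing: ((a + b) * (a + b))
}
```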

float4x4 and matrix mean the same thing (matrix is a 4x4 matrix of floats by default).

HLSL Texture Usage

First you declare a texture object (the image). Then you declare and initialize the texture sampler, which describes how the image is going to be sampled. Finally, you sample the texture using the declared sampler (most of the time this happens in the pixel/fragment shader).

Texture Filtering

Magnification: there are more pixels on the screen than there are texels, i.e. the texture is displayed larger than the texture map itself, so one texel covers several pixels.

Minification: each pixel covers more than one texel. This is more complicated than magnification; the usual solution is mipmapping.

Texture Filtering for magnification

Point filtering: uses the color of the texel closest to the pixel center. Fastest technique, lowest quality (blocky).

Linear filtering: bilinear interpolation of the four nearest texels. Slower but smoother.

Anisotropic filtering: good for objects oblique to the camera. Reduces distortion and improves the rendered output. Most expensive and best looking.
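Point and linear filtering can be compared on a tiny texture with a CPU-side C++ sketch that mirrors the texture/sampler/sample steps described earlier: a texture object, a sampler setting, and a sample call. It assumes a single-channel texture, clamp addressing, and texel centers at half-integer positions; all names are hypothetical, and anisotropic filtering is not modeled. On a 2 x 1 texture holding 0 and 1, sampling at u = 0.375 returns 0 with point filtering and 0.25 with linear filtering.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// A tiny single-channel texture (the "texture object").
struct Texture {
    int width, height;
    std::vector<float> texels; // row-major, width * height values
    float at(int x, int y) const {
        // Clamp addressing: coordinates outside the texture reuse the edge texel.
        x = std::clamp(x, 0, width - 1);
        y = std::clamp(y, 0, height - 1);
        return texels[y * width + x];
    }
};

enum class Filter { Point, Linear }; // a much simplified "sampler state"

// Sample at normalized coordinates (u, v) in [0, 1].
float sample(const Texture& t, Filter f, float u, float v) {
    // Convert to texel space, where texel centers sit at integer positions.
    float x = u * t.width - 0.5f;
    float y = v * t.height - 0.5f;
    if (f == Filter::Point) {
        // Nearest texel to the sample position.
        return t.at(static_cast<int>(std::floor(x + 0.5f)),
                    static_cast<int>(std::floor(y + 0.5f)));
    }
    // Bilinear: blend the 4 surrounding texels by their distance.
    int x0 = static_cast<int>(std::floor(x));
    int y0 = static_cast<int>(std::floor(y));
    float fx = x - x0, fy = y - y0;
    float top = t.at(x0, y0) * (1 - fx) + t.at(x0 + 1, y0) * fx;
    float bottom = t.at(x0, y0 + 1) * (1 - fx) + t.at(x0 + 1, y0 + 1) * fx;
    return top * (1 - fy) + bottom * fy;
}
```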

For texture minification, the same filters could be used, but it is more complicated: texels that get skipped produce artifacts and decrease rendering quality, so down-sampled images work better. Mipmapping: instead of only using the larger texture map, it also produces smaller maps, each half the size (in width and height) of the previous one. These are prebaked, not computed in real time.
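The halving rule gives the size of every level in a mip chain. A C++ sketch (hypothetical function names) lists the levels down to 1 x 1 and totals their texels: a 256 x 256 texture has 9 levels and 87,381 texels in total, about one third more than the base level alone.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Width and height of every level in a full mip chain: each level halves the
// previous one (rounding down, never below 1) until the 1x1 level.
std::vector<std::pair<int, int>> mipChain(int width, int height) {
    std::vector<std::pair<int, int>> levels;
    levels.push_back({width, height});
    while (width > 1 || height > 1) {
        width = std::max(1, width / 2);
        height = std::max(1, height / 2);
        levels.push_back({width, height});
    }
    return levels;
}

// Total number of texels stored across all levels.
long long totalTexels(const std::vector<std::pair<int, int>>& levels) {
    long long sum = 0;
    for (const auto& level : levels)
        sum += static_cast<long long>(level.first) * level.second;
    return sum;
}
```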

Texture evaluation: with point mip filtering the nearest mip level is selected; with linear mip filtering the two surrounding mip levels are selected and blended. The selected mip level is then sampled with point, linear, or anisotropic filtering.
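Choosing between one mip level and two can be sketched in C++. The sketch assumes the level of detail is log2 of how many texels a pixel covers; real hardware derives it from screen-space derivatives of the texture coordinates, and the names are hypothetical. A level of detail at or below 0 means magnification, so level 0 is used. For a pixel covering 3 texels (level of detail about 1.58), point mip filtering picks level 2, while linear mip filtering blends levels 1 and 2 with roughly 58% weight on level 2.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Simplified level of detail: log2 of how many texels one pixel covers.
// Level 0 is the full-size texture.
float mipLod(float texelsPerPixel) {
    return std::log2(texelsPerPixel);
}

// Keep the level of detail inside the range of existing mip levels.
float clampLod(float lod, int levelCount) {
    return std::clamp(lod, 0.0f, static_cast<float>(levelCount - 1));
}

// Point mip filtering: use the single nearest mip level.
int nearestMipLevel(float lod, int levelCount) {
    return static_cast<int>(std::lround(clampLod(lod, levelCount)));
}

// Linear mip filtering: the two surrounding levels, plus how much of the
// higher-numbered (smaller) level to blend in.
struct MipBlend {
    int lower;
    int upper;
    float upperWeight;
};

MipBlend linearMipLevels(float lod, int levelCount) {
    float l = clampLod(lod, levelCount);
    int lower = static_cast<int>(std::floor(l));
    int upper = std::min(lower + 1, levelCount - 1);
    return {lower, upper, l - static_cast<float>(lower)};
}
```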
