Thursday, 18 September 2025

UE5 Niagara Notes

The Basics

- Systems

- a system is a container that holds your effect. You can apply settings on the system that affect everything inside it.

- Has a timeline panel in the System editor that shows the emitters and can also be used to manage them.

- Emitters

- where particles are generated

- controls how particles are born, what happens when they age, and how they look and behave

- organized in a stack made up of several groups; inside each group you can put modules that perform tasks

- These groups are:

- Emitter Spawn:

- what happens when an emitter is first created

- defines initial setups

- Emitter Update:

- defines modules that run every frame on the CPU

- used to define particle spawning when you want particles to keep spawning every frame (e.g. the Spawn Rate module)

- Particle Spawn:

- Called once per particle when it is first born

- defines initialization of the particles, such as birth location, color, size, etc.

- Particle Update:

- called per particle on each frame

- use to define anything that needs to change frame by frame as the particles age (e.g. if the particles' color or size changes over time, or if the particles are affected by some type of force).

- Event Handler:

- generate events in one or more emitters

- then create listening event handlers in other emitters that trigger a behavior in reaction to a generated event

- Render:

- define the display of particles

- use a mesh renderer if you want a 3D model as the basis of your particles

- use a sprite renderer if you want particles as 2D sprites

- many other renderers to choose from

- These groups are color coded: anything green is related to the emitter, and anything red is related to the particles
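The sketch below makes the timing of the groups concrete. It is a minimal Python model of a single emitter ticking frame by frame. It is not Niagara code or its API.

- The class, the method names, the spawn rate, and the lifetime are all hypothetical.
- The order inside one frame is an assumed simplification: spawn new particles, update all particles, then remove dead ones.
- Events and renderers are left out.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Particle:
    age: float = 0.0
    lifetime: float = 0.5
    size: float = 2.0


@dataclass
class Emitter:
    spawn_rate: float = 8.0  # particles per second (hypothetical value)
    particles: List[Particle] = field(default_factory=list)
    spawn_accumulator: float = 0.0

    def emitter_spawn(self) -> None:
        # Emitter Spawn: runs once when the emitter is created (initial setup).
        self.particles.clear()
        self.spawn_accumulator = 0.0

    def emitter_update(self, dt: float) -> int:
        # Emitter Update: runs once per frame; here it works out how many
        # particles to spawn this frame, like a spawn-rate module would.
        self.spawn_accumulator += self.spawn_rate * dt
        count = int(self.spawn_accumulator)
        self.spawn_accumulator -= count
        return count

    def particle_spawn(self) -> Particle:
        # Particle Spawn: runs once per new particle to set its initial values.
        return Particle(age=0.0, lifetime=0.5, size=2.0)

    def particle_update(self, p: Particle, dt: float) -> None:
        # Particle Update: runs for every particle, every frame
        # (aging, size over life, forces, ...).
        p.age += dt
        p.size = 2.0 * max(0.0, 1.0 - p.age / p.lifetime)

    def tick(self, dt: float) -> None:
        # Simplified per-frame order (an assumption, see lead-in).
        for _ in range(self.emitter_update(dt)):
            self.particles.append(self.particle_spawn())
        for p in self.particles:
            self.particle_update(p, dt)
        # Kill particles that have reached the end of their lifetime.
        self.particles = [p for p in self.particles if p.age < p.lifetime]


emitter = Emitter()
emitter.emitter_spawn()
for _ in range(10):
    emitter.tick(0.125)
print(len(emitter.particles))  # 3: one spawn per frame, each living 4 frames
```

With one particle spawned per frame and a lifetime of four frames, the emitter settles at three live particles after each cleanup. This shows that Emitter Update controls *how many* particles exist, while Particle Spawn and Particle Update control *what each one does*.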

- Modules

- building blocks of effects

- add modules to groups to make a stack

- processed sequentially from top to bottom

- Think of a module as a container for doing some math: you pass in some data, operate on it, then write the result out at the end of the module

- Modules are built using HLSL or using the node editor.

- double click any module to look at the graph inside

- scripts start by reading an input and end by writing the output back out, so modules further down the stack can use it
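The "read inputs, do math, write outputs, next module down" idea can be sketched in Python as a list of functions sharing one parameter map.

- The keys borrow Niagara's `Particles.` namespace style, but the dictionary, the module functions, and the gravity value of -980 on Z are illustrative assumptions.
- None of this is Niagara's actual API.

```python
from typing import Callable, Dict, List, Tuple

Vec3 = Tuple[float, float, float]
ParamMap = Dict[str, Vec3]
Module = Callable[[ParamMap, float], None]


def gravity_force(params: ParamMap, dt: float) -> None:
    # Read velocity, add gravity (assumed -980 units/s^2 on Z), write it back.
    vx, vy, vz = params["Particles.Velocity"]
    params["Particles.Velocity"] = (vx, vy, vz - 980.0 * dt)


def solve_position(params: ParamMap, dt: float) -> None:
    # Read position and velocity, integrate, write the new position back.
    pos = params["Particles.Position"]
    vel = params["Particles.Velocity"]
    params["Particles.Position"] = tuple(p + v * dt for p, v in zip(pos, vel))


def run_stack(stack: List[Module], params: ParamMap, dt: float) -> ParamMap:
    # Modules run strictly top to bottom; each sees what earlier ones wrote.
    for module in stack:
        module(params, dt)
    return params


start = {"Particles.Position": (0.0, 0.0, 0.0), "Particles.Velocity": (0.0, 0.0, 0.0)}
a = run_stack([gravity_force, solve_position], dict(start), 0.5)
b = run_stack([solve_position, gravity_force], dict(start), 0.5)
print(a["Particles.Position"])  # (0.0, 0.0, -245.0): gravity ran first
print(b["Particles.Position"])  # (0.0, 0.0, 0.0): position solved before gravity
```

Swapping two modules changes the result. This is why stack order matters.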

- Parameters

- four types of parameters:

- Primitive: numeric data of varying precision and channel widths

- Enum: fixed set of named values

- Struct: combined set of primitive and enum types

- Data Interfaces: defines functions that provide data from external data sources

- add a custom parameter module, then add new parameters to it.
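As a rough analogy only, the four parameter kinds map onto familiar Python constructs:

- a primitive is a number or a tuple of numbers;
- an enum is an `Enum`;
- a struct is a dataclass;
- a data interface is an object that exposes functions fetching data from somewhere else.

Every type and name below is hypothetical. `LinearRamp` stands in for some external data source such as a curve.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Protocol, Tuple

# Primitive: plain numeric data of some width (a float, a 3-component vector).
Vec3 = Tuple[float, float, float]


class SpawnShape(Enum):
    # Enum: a fixed set of named values (hypothetical example).
    SPHERE = 0
    BOX = 1


@dataclass
class SpawnSettings:
    # Struct: primitives and enums grouped together (hypothetical example).
    lifetime: float
    offset: Vec3
    shape: SpawnShape


class ScalarSource(Protocol):
    # Data interface: exposes functions that fetch data from elsewhere
    # (a curve, a mesh, a texture...); here it is only a function signature.
    def sample(self, t: float) -> float: ...


class LinearRamp:
    # Stand-in "external data": a 0-to-1 ramp clamped at both ends.
    def sample(self, t: float) -> float:
        return max(0.0, min(1.0, t))


def size_over_life(age: float, settings: SpawnSettings, source: ScalarSource) -> float:
    # Takes a primitive (age), a struct (which holds an enum), and a data
    # interface, and queries the data interface for the final value.
    return source.sample(age / settings.lifetime)


settings = SpawnSettings(lifetime=2.0, offset=(0.0, 0.0, 0.0), shape=SpawnShape.BOX)
print(size_over_life(0.5, settings, LinearRamp()))  # 0.25
```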

Niagara Asset Browser Window

- Content Area

- Categories

- Toolbar (with the filter and search bar)

- Details

The VFX Workflow

- Create a system to hold and adjust the properties of your emitters

- Create emitters inside your system.

- Create Modules inside your emitters

- Each module, emitter, and system you create uses resources. To conserve resources, check whether you can accomplish what you want with what you've already created.

Resources

Notes on Specific parts of the Niagara System

Particle Spawn

Initialize Particle

Lifetime mode

Position Mode

Particle Update

Drag

Curl Noise Force

Point Attraction Force

Vortex Force

Point Force

Wind Force

Houdini Workshop - Weeks 1-4

One of the things discussed in the workshop is the idea of levels of noise. For something to look realistic in VFX, you need three levels of noise to make it believable: big, medium, and small, with the amplitude of the noise getting smaller at each level.
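A minimal 1D Python sketch of the three-level idea follows.

- Each level uses half the feature size and half the amplitude of the previous one, so big shapes dominate and small detail rides on top.
- The hash, the value-noise function, the halving ratio, and the base size of 100 are illustrative assumptions. This is not Houdini's noise implementation.

```python
import math


def hash01(i: int, seed: int) -> float:
    # Cheap integer hash mapped to [0, 1). Illustrative only.
    n = (i * 374761393 + seed * 668265263) & 0xFFFFFFFF
    n = ((n ^ (n >> 13)) * 1274126177) & 0xFFFFFFFF
    return (n ^ (n >> 16)) / 2**32


def value_noise(x: float, seed: int) -> float:
    # Smoothly interpolated random values at integer positions, in [-1, 1).
    i = math.floor(x)
    f = x - i
    t = f * f * (3.0 - 2.0 * f)  # smoothstep fade between lattice points
    a, b = hash01(i, seed), hash01(i + 1, seed)
    return 2.0 * (a + (b - a) * t) - 1.0


def three_level_noise(x: float, base_size: float = 100.0, base_amp: float = 1.0) -> float:
    # Big, medium, small: each level is half the size and half the amplitude.
    total = 0.0
    for level in range(3):
        size = base_size / 2**level
        amp = base_amp / 2**level
        total += amp * value_noise(x / size, seed=level)
    return total


print([round(three_level_noise(x), 3) for x in range(0, 500, 100)])
```

Because the amplitudes are 1, 0.5, and 0.25, the combined value always stays within ±1.75 × `base_amp`.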

Voxels were also discussed; you can think of them as 3D pixels.
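The "3D pixels" idea can be shown with a small Python voxel grid.

- Like an image, it is a regular grid of cells stored in one flat array, but with a third (z) axis.
- The class and method names, the x-fastest memory layout, and the voxel size are assumptions for illustration.

```python
from typing import List, Tuple


class VoxelGrid:
    # A 3D grid of cells ("3D pixels"), stored in one flat list.
    def __init__(self, nx: int, ny: int, nz: int, voxel_size: float = 1.0):
        self.nx, self.ny, self.nz = nx, ny, nz
        self.voxel_size = voxel_size
        self.values: List[float] = [0.0] * (nx * ny * nz)

    def index(self, x: int, y: int, z: int) -> int:
        # x varies fastest, then y, then z (rows of pixels stacked into slices).
        return x + self.nx * (y + self.ny * z)

    def cell_of(self, px: float, py: float, pz: float) -> Tuple[int, int, int]:
        # Which voxel a world-space point falls into.
        s = self.voxel_size
        return (int(px // s), int(py // s), int(pz // s))

    def write(self, x: int, y: int, z: int, value: float) -> None:
        self.values[self.index(x, y, z)] = value

    def read(self, x: int, y: int, z: int) -> float:
        return self.values[self.index(x, y, z)]


grid = VoxelGrid(4, 3, 2, voxel_size=0.5)
print(grid.index(3, 2, 1))          # 23, the last of 4 * 3 * 2 = 24 cells
print(grid.cell_of(1.5, 0.2, 0.9))  # (3, 0, 1)
```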

Starting out

First we need a Curve node to draw the outline of the island. The Curve node works similarly to Photoshop's Pen tool. Once the curve is complete, we need to flip the normals, as they are currently facing down, away from us. Add a Reverse node to turn the normals right side up; if we skip this step, the geometry won't render correctly. Finally, add a PolyExtrude node to give it some depth.
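Reversing works because a polygon's face normal is derived from the order of its vertices. Listing the same points in the opposite order flips the normal.

The Python sketch below computes a polygon normal with Newell's method. It uses the right-hand convention, which may not match Houdini's own winding convention, but under either convention reversing the order flips the direction. The outline points are made up, and `reverse` is a hypothetical helper, not the Reverse node itself.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def polygon_normal(points: List[Point]) -> Point:
    # Newell's method: a robust face normal for a (possibly non-planar) polygon.
    # Its direction depends on the order the vertices are listed in.
    nx = ny = nz = 0.0
    for i, (x0, y0, z0) in enumerate(points):
        x1, y1, z1 = points[(i + 1) % len(points)]
        nx += (y0 - y1) * (z0 + z1)
        ny += (z0 - z1) * (x0 + x1)
        nz += (x0 - x1) * (y0 + y1)
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)


def reverse(points: List[Point]) -> List[Point]:
    # Same points, opposite vertex order.
    return list(reversed(points))


# A square outline lying flat on the ground plane (Y up).
outline = [(0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (1.0, 0.0, 0.0)]
print(polygon_normal(outline))           # (0.0, 1.0, 0.0)
print(polygon_normal(reverse(outline)))  # (0.0, -1.0, 0.0)
```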

Making Volcanoes

Change the first input box for radius to change the top radius.

Once you've made enough mountains, combine them all, including your island shape, with a Merge node.

Heightfield Mask by Geometry

The mask is now finished, and we can move on to adding noise to the terrain.

Adding Noise

Connect the heightfield project node into the first input of the heightfield noise node, with the second input coming from the mask we just made. Change the parameters to:

- Noise Type: Worley Cellular F2-F1

- Amplitude: 200

- Element size: 810 (this is the scale of the noise)

- Turn off "center noise" (noise is actually 4 dimensions of numbers; center noise means the noise starts in the center of the scene, aka the origin)
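To show roughly what the settings above do, here is a minimal 2D Python sketch of Worley (cellular) noise with the F2 - F1 pattern:

- Each point measures the distance to the nearest (F1) and second-nearest (F2) random feature points.
- F2 - F1 is near zero along the borders between cells, which gives the ridged, cracked look.
- Amplitude scales the height.
- Element size is treated as the size of one noise cell in world units.
- The mask (0 to 1) limits where the noise applies.

This is not Houdini's implementation. The hash, the one-feature-point-per-cell layout, and the 3x3 neighbourhood search (a common approximation that very rarely misses a closer point) are assumptions. The function names are hypothetical.

```python
import math


def _feature_point(cx: int, cy: int, seed: int = 0):
    # One pseudo-random point inside grid cell (cx, cy). Illustrative hash only.
    h = ((cx * 73856093) ^ (cy * 19349663) ^ (seed * 83492791)) & 0xFFFFFFFF
    rx = ((h * 1103515245 + 12345) & 0x7FFFFFFF) / 0x80000000
    ry = ((h * 22695477 + 1) & 0x7FFFFFFF) / 0x80000000
    return cx + rx, cy + ry


def worley_f2_minus_f1(x: float, y: float, seed: int = 0) -> float:
    # Distances to feature points in the surrounding 3x3 cells (approximation).
    cx, cy = math.floor(x), math.floor(y)
    dists = []
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            fx, fy = _feature_point(cx + dx, cy + dy, seed)
            dists.append(math.hypot(x - fx, y - fy))
    dists.sort()
    return dists[1] - dists[0]  # F2 - F1: close to 0 near cell borders


def heightfield_noise(x: float, y: float, mask: float,
                      amplitude: float = 200.0, element_size: float = 810.0) -> float:
    # Scale world position by the element size, then weight by amplitude and mask.
    n = worley_f2_minus_f1(x / element_size, y / element_size)
    return amplitude * n * mask


print(round(heightfield_noise(1234.0, 567.0, mask=1.0), 2))
```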

Additional Resources

Saturday, 26 July 2025

Wednesday, 16 July 2025

Wednesday, 2 July 2025

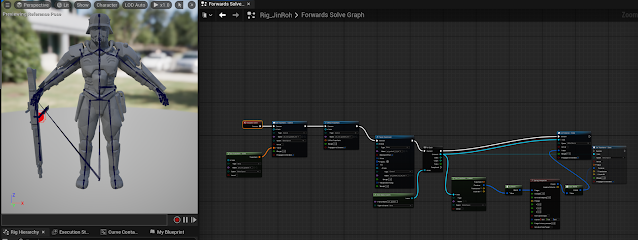

Common Art Sprint #4 Delivery

Responsible for:

- updating the jinroh model to the newest version

- updating the gun model with new UVs

- adding IK controls to the ammo gunbelt, giving animators additional control and letting them animate it draped over the arm

- added twist joints for the arms

- added additional controls for the arm braces, kneepads, backpack, and new gun holster

Wednesday, 18 June 2025

Common Art Sprint #3 Delivery

Responsible for:

- General bug fixes and updates to the rig

- adding more controls to the gun, specifically for the ammo belt

- implemented dynamic spring interpolation for the gun belt inside an Unreal Control Rig

- researched dynamic joint chains in Maya that animators can simulate with and have more control over
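For reference, the general technique behind spring interpolation is a damped spring that chases a target value each frame. Below is a minimal Python sketch of it.

- It uses semi-implicit Euler integration, unit mass, and hypothetical parameter names.
- The Control Rig node used for the gun belt may expose different parameters and integrate differently.

```python
def spring_step(x: float, v: float, target: float,
                stiffness: float, damping: float, dt: float):
    # Semi-implicit Euler: update velocity first, then position with the new velocity.
    accel = stiffness * (target - x) - damping * v
    v = v + accel * dt
    x = x + v * dt
    return x, v


# Follow a target that jumps from 0 to 1. damping = 2 * sqrt(stiffness) is
# critical damping for unit mass (no overshoot in the continuous case).
x, v = 0.0, 0.0
for frame in range(300):  # 5 seconds at 60 fps
    x, v = spring_step(x, v, target=1.0, stiffness=100.0, damping=20.0, dt=1 / 60)
print(round(x, 4))  # 1.0
```

Lowering `damping` makes the value overshoot and wobble before settling, which is the look you would want for a loosely hanging belt.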

Easy Notes Archive

Tech Art Blueprints Demo Animation State Machines Part 1 Animation State Machines Part 2 Math for Tech Art Part 1 Math for Tech Art Part 2 ...

Quick and Fast Instructions Create your NURBS curve Put your Curve into an empty group (Ctrl + G). Make sure you freeze and delete history ...

Resources Stop Staring By Jason Osipa Art Of Rigging – Kiaran Ritchie Muscles of the Face You'll want to conform to the anatomy of ...